Ethical Considerations in AI-Driven Learning: Protecting Privacy, Fairness, and Student Rights

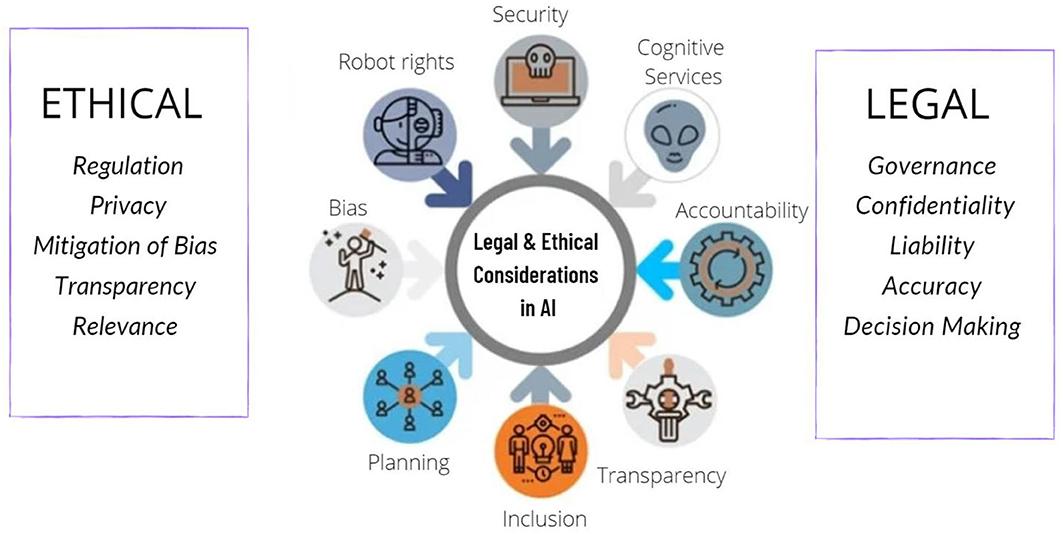

Artificial Intelligence (AI) is reshaping education, offering personalized learning, efficient assessment, and data-driven insights. While AI-driven learning promises transformative benefits, it also raises a range of ethical questions, especially around privacy, fairness, and student rights. Handling these questions responsibly is crucial to fostering trust, creating equitable educational opportunities, and safeguarding the future of all learners.

Understanding AI in Education: Opportunities and Challenges

AI-powered tools can adapt lessons for individual needs, automate grading, and predict student performance, creating more engaging and effective learning experiences. However, as educational institutions increasingly adopt these technologies, critical questions arise:

- How is students’ personal data collected and used?

- Are algorithms free from bias?

- What rights do students have over their data and educational outcomes?

Protecting Student Privacy in AI-Driven Learning

Student privacy is a core ethical concern in AI-driven learning environments. AI systems often rely on extensive data, ranging from academic performance to social behavior. Ensuring data security and informed consent is paramount.

Key Privacy Considerations

- Data Collection Transparency: Clearly communicate what data is collected, how it’s used, and who has access.

- Informed Consent: Obtain explicit permission from students and guardians before gathering data.

- Secure Data Storage: Utilize robust cybersecurity measures to protect sensitive information.

- Regulatory Compliance: Adhere to applicable privacy laws, such as FERPA in the United States and GDPR in the European Union.
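
As one way to picture the data-handling points above, the following Python sketch keeps only the fields a tool needs and replaces the student ID with a keyed hash before the record leaves the school's systems. The field names, the `SECRET_KEY` value, and both helper functions are hypothetical and chosen for illustration only. Note that this is pseudonymization rather than full anonymization: anyone holding the key can recompute the link between an ID and its pseudonym, so it reduces risk but does not remove legal or ethical obligations.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would be loaded from a secrets manager,
# never hard-coded in source.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical allow-list of the only fields the learning tool needs.
ALLOWED_FIELDS = {"grade_level", "quiz_score"}


def pseudonymize_id(student_id: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed hash (HMAC-SHA256, hex-encoded) of the student ID."""
    return hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes = SECRET_KEY) -> dict:
    """Drop fields outside the allow-list and swap the ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["student_ref"] = pseudonymize_id(record["student_id"], key)
    return reduced


if __name__ == "__main__":
    raw = {
        "student_id": "S-1001",
        "name": "Example Student",
        "home_address": "123 Example St",
        "grade_level": 9,
        "quiz_score": 87,
    }
    # Name and address are dropped; the ID becomes a 64-character hex string.
    print(minimize_record(raw))
```

Using a keyed hash instead of a plain hash means the pseudonyms cannot be rebuilt by someone who only knows the format of student IDs.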

Ensuring Fairness in AI Algorithms

Algorithmic fairness is pivotal in AI-driven education. If AI systems are trained on biased data or built on poorly designed algorithms, they risk perpetuating inequality. For example, an automated grading system might unintentionally favor certain demographics, or a predictive tool may underestimate the potential of marginalized students.

Common Fairness Challenges

- Bias in Training Data: Past inequalities can seep into AI models, influencing predictions and recommendations.

- Opaque Decision-Making: Students and educators may not understand how algorithms reach conclusions.

- Lack of Oversight: Without human review, unfair outcomes may go unaddressed.

Strategies to Promote Fairness

- Diverse Data Sets: Train AI on inclusive, representative data to reduce bias.

- Algorithm Auditing: Regularly evaluate models for disproportionate impact on groups.

- Human-in-the-Loop Systems: Ensure educators can review and override AI decisions.
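
To make the idea of an algorithm audit more concrete, here is a minimal Python sketch that compares how often a model gives a favorable outcome (for example, a recommendation for an advanced course) to each student group. The function names, the group labels, and the sample data are all hypothetical. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance and is assumed here purely for illustration; an institution would need to choose its own metrics and thresholds.

```python
from collections import defaultdict


def selection_rates(records):
    """Compute the share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the favorable outcome
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical audit sample: (group label, favorable outcome?)
    sample = (
        [("group_a", True)] * 4 + [("group_a", False)]
        + [("group_b", True)] * 2 + [("group_b", False)] * 3
    )
    rates = selection_rates(sample)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio)
    if ratio < 0.8:  # illustrative threshold, see the note above
        print("Flag for human review: outcomes differ noticeably across groups.")
```

A low ratio does not prove the model is unfair, and a high one does not prove it is fair. It is a prompt for educators to look more closely, which is where human review comes in.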

Upholding Student Rights in AI-Powered Education

Student rights must remain at the heart of AI integration in learning. Learners deserve autonomy, dignity, and ownership over their educational data and experiences.

Student Rights to Prioritize

- Access to Information: Students must know what data is held about them and how algorithms affect their learning.

- Right to Challenge Decisions: Provide processes for students to appeal AI-generated grades or recommendations.

- Participation in Governance: Involve students and families in policy decisions about AI use.

- Protecting Vulnerable Learners: Pay special attention to the unique risks faced by students from marginalized backgrounds.
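
The right to challenge a decision can also be reflected in how a system records AI-generated grades. The sketch below is one hypothetical design, not a description of any real platform: the `GradeDecision` class, its fields, and the `REVIEW_THRESHOLD` value are all assumptions. A grade cannot be finalized without a named human reviewer when the model's confidence is low or the student has appealed, and every step is written to a log the student could later be shown.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical cutoff: below this model confidence, a person must review.
REVIEW_THRESHOLD = 0.85


@dataclass
class GradeDecision:
    student_ref: str            # pseudonymous reference, not a real name
    ai_score: float             # score proposed by the model
    ai_confidence: float        # model's self-reported confidence, 0 to 1
    appealed: bool = False
    final_score: Optional[float] = None
    audit_log: List[str] = field(default_factory=list)

    def needs_human_review(self) -> bool:
        """Review is required after an appeal or when confidence is low."""
        return self.appealed or self.ai_confidence < REVIEW_THRESHOLD

    def file_appeal(self, reason: str) -> None:
        """Record a student's appeal; this forces human review."""
        self.appealed = True
        self.audit_log.append(f"appeal: {reason}")

    def finalize(self, reviewer: Optional[str] = None,
                 override: Optional[float] = None) -> float:
        """Set the final score; the reviewer may override the AI score."""
        if self.needs_human_review() and reviewer is None:
            raise ValueError("human review required before finalizing")
        self.final_score = self.ai_score if override is None else override
        self.audit_log.append(
            f"finalized by {reviewer or 'auto'}: {self.final_score}"
        )
        return self.final_score


if __name__ == "__main__":
    decision = GradeDecision("ref-001", ai_score=60.0, ai_confidence=0.95)
    decision.file_appeal("rubric applied to the wrong question")
    decision.finalize(reviewer="teacher_1", override=68.0)
    print(decision.audit_log)
```

Keeping the appeal and the reviewer's decision in the same record makes it easier to answer a student who asks how a grade was reached.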

Benefits of Ethical AI-Driven Learning

When ethical considerations guide AI deployment in education, the benefits for students and institutions multiply:

- Personalized Learning: Adaptive systems target students’ strengths and weaknesses while respecting their rights.

- Reduced Bias: Continuous fairness audits minimize disparities and promote equity.

- Safer Data Practices: Rigorous privacy safeguards protect sensitive student information.

- Higher Trust: Transparent AI processes encourage stakeholder confidence and engagement.

Case Study: Ethical AI Implementation in a High School

Greenfield High integrated an AI-powered tutoring platform to support math achievement. To address ethical concerns, the administration:

- Clearly informed parents and students about data collection and AI functionality.

- Stored data in anonymized, encrypted form to support compliance with FERPA.

- Conducted regular algorithm audits for fairness and involved faculty in reviewing AI-generated recommendations.

- Established a student panel to give feedback and propose changes to the system.

The result? Improved academic outcomes without compromising privacy or fairness, and higher student trust in technology-driven initiatives.

Practical Tips for Responsible AI Use in Education

- Develop Clear Policies: Draft transparent policies on AI data use, security, and oversight.

- Engage Stakeholders: Include students, teachers, and families in decision-making.

- Invest in Training: Educate staff about AI ethics, privacy laws, and algorithmic bias.

- Monitor and Review: Continuously assess the impact of AI tools and update practices as needed.

- Use Explainable AI: Favor systems that make their decisions understandable to non-experts.

Conclusion: Building the Future of Ethical AI-Driven Learning

AI in education represents a powerful force for innovation and equity. However, its success depends on thoughtful attention to ethical considerations—notably around privacy, fairness, and student rights. By fostering transparent, inclusive, and responsible AI practices, educators and technologists can build learning environments that enhance opportunities for all students while safeguarding their dignity and future.

Ethical AI-driven learning isn’t just about algorithms—it’s about trust, empowerment, and the fundamental rights of every learner. Let’s shape the future of education together, with ethics as our compass.