Ethical Considerations in AI-Driven Learning: Safeguarding Privacy, Fairness, and Student Well-being

AI-driven learning is reshaping educational environments, creating opportunities for personalized instruction and new teaching strategies. However, as artificial intelligence becomes more integrated into classrooms and e-learning platforms, ethical considerations (notably privacy, fairness, and student well-being) must take center stage. This guide explores the key ethical challenges, benefits, real-world case studies, and practical tips that educators and policymakers can use to keep AI in education safe and equitable.

Introduction: The Rise of AI in Education

Artificial intelligence (AI) in education is no longer a futuristic concept. Today, AI-driven learning platforms can assess student progress, predict learning outcomes, personalize assignments, and even provide real-time feedback. Technologies such as machine learning and adaptive learning systems are reshaping how students learn and how teachers instruct.

While the advantages are compelling, the ethical implications of deploying these technologies are far-reaching. Educators and administrators must prioritize data privacy, algorithmic fairness, and the protection of student well-being to create safe, inclusive learning environments.

Why Ethical Considerations Matter in AI-Driven Learning

- Data Sensitivity: AI systems rely on personal and academic student data, which may be vulnerable to breaches or misuse.

- Bias & Fairness: Algorithms can perpetuate or even amplify biases, impacting grading, admissions, and recommendations.

- Well-being: Automated learning experiences affect student motivation, stress levels, and mental health.

- Legal Compliance: Schools must account for GDPR, FERPA, and other data protection regulations.

Safeguarding Student Privacy in AI-Driven Education

Types of Data Collected

AI-driven learning platforms gather a variety of data, including:

- Academic records and performance metrics

- Behavioral data (e.g., attendance, time spent on tasks)

- Personal identifiers

- Device and location data

Challenges to Data Privacy

- Data Breaches: Centralized data storage poses hacking risks.

- Lack of Transparency: Students and parents may not fully understand how data is collected, used, and shared.

- Unintended Sharing: Third-party integrations and vendors could access sensitive data.

Best Practices for Ensuring Privacy

- Clear Consent Forms: Communicate what data is collected and why.

- Robust Security: Use strong encryption for data in transit and at rest, and run regular security audits.

- Role-based Access: Limit data exposure to authorized personnel only.

- Data Minimization: Collect only the data the AI system actually needs.

- Compliance: Adhere to GDPR, COPPA, FERPA, and local legislation.
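To make data minimization concrete, here is a minimal sketch, not a definitive implementation. It keeps only an allow-list of fields and swaps the real student ID for a keyed hash before a record reaches any AI component. The field names, the `minimize_record` and `pseudonymize` helpers, and the demo key are all assumptions made up for illustration; a real deployment would load the key from a secrets manager and define the allow-list with its privacy officer.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this imaginary model needs.
ALLOWED_FIELDS = {"grade_level", "quiz_score", "minutes_on_task"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Replace a real identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["student_ref"] = pseudonymize(record["student_id"], secret_key)
    return reduced


# Made-up record for illustration only.
raw = {
    "student_id": "S-1024",
    "name": "Example Student",
    "home_address": "1 Example Road",
    "grade_level": 7,
    "quiz_score": 0.82,
    "minutes_on_task": 34,
}
key = b"demo-key-load-real-keys-from-a-secrets-manager"  # assumption: demo only
minimized = minimize_record(raw, key)
print(sorted(minimized))
# ['grade_level', 'minutes_on_task', 'quiz_score', 'student_ref']
```

Name and address never leave the source system, and the same student always maps to the same `student_ref`, so progress can still be tracked over time. Keep in mind that pseudonymized records can generally still be linked back to a person by whoever holds the key, so they need the same access controls as the original data.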

Promoting Fairness: Ensuring Equity in AI-Driven Learning

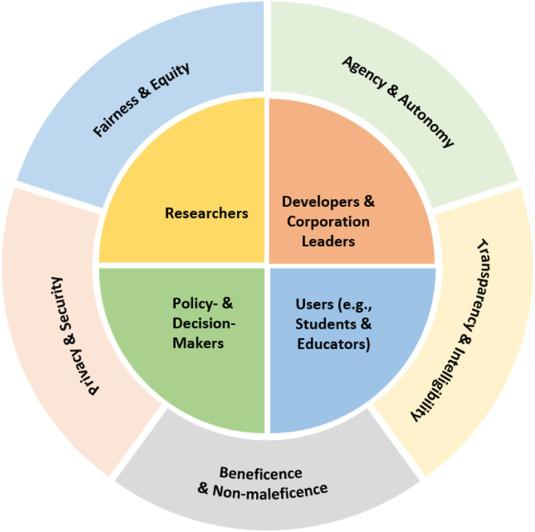

Algorithmic bias in education can lead to unfair disadvantages for underrepresented or marginalized student groups. Ethical AI deployment in education must address these challenges proactively.

Sources of Inequity

- Training data reflecting societal biases

- Lack of diverse voices in AI system design

- Algorithmic opacity and “black box” models

Practical Strategies for Fair AI

- Diverse Data Sets: Use data reflecting all student populations.

- Bias Audits: Regularly test algorithms for discriminatory outcomes.

- Human Oversight: Ensure educators review AI-generated recommendations or grades.

- Transparency: Explain how decisions are made and allow appeals.

- Stakeholder Involvement: Invite feedback from students, parents, and teachers.
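A bias audit can start very simply. The sketch below, offered as one possible starting point rather than a complete fairness methodology, compares how often each student group receives a positive outcome and reports the ratio of the lowest rate to the highest. The group labels and data are invented, the function names are hypothetical, and the 0.8 review threshold is an assumption borrowed from the "four-fifths" heuristic used in US employment contexts, not an education standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome
    is True when the tool produced the favorable result.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest group rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest


# Made-up data: (group label, whether the tool recommended the advanced track)
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 10
    + [("group_b", True)] * 25 + [("group_b", False)] * 25
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumption: borrowed heuristic, tune with stakeholders
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

In this invented sample, group_a is recommended 80% of the time and group_b 50% of the time, so the ratio falls below the threshold and the tool would be flagged for closer human review. A low ratio does not prove discrimination on its own; it is a signal to investigate the data and the model.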

Safeguarding Student Well-being in AI-Driven Learning Environments

While AI can personalize and optimize learning, ethical implementation must also safeguard the psychological and emotional wellness of students.

Potential Risks

- Dependence on automated feedback diminishing teacher-student relationships

- Stress from constant monitoring and performance tracking

- Social isolation in digital-only environments

- Increased anxiety from adaptive testing or game-based learning

Supporting Student Well-being

- Opt-out Options: Offer alternatives to AI-based activities for students who prefer human-led guidance.

- Empathy-focused Design: Integrate features that promote positive reinforcement and casual check-ins.

- Continuous Monitoring: Use AI to identify struggling students early—but complement with human intervention.

- Training Educators: Empower teachers with resources to manage technology-related stress and maintain student trust.

- Digital Literacy: Teach students about AI tools and how to engage safely and responsibly.
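The "identify early, then involve a human" idea from the list above can be sketched as follows. This is a hedged illustration under simple assumptions: scores are fractions between 0 and 1, a drop in the recent average counts as a warning sign, and the output is only a queue for a teacher or counselor to read. The `flag_for_check_in` function, the `ReviewItem` type, and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ReviewItem:
    student_ref: str
    reason: str


def flag_for_check_in(scores_by_student, window=3, drop_threshold=0.15):
    """Queue students whose recent average fell well below their earlier average.

    Nothing is decided automatically: the result is a list for a teacher
    or counselor to review and act on.
    """
    queue = []
    for student_ref, scores in scores_by_student.items():
        if len(scores) < 2 * window:
            continue  # not enough history to compare
        earlier = mean(scores[:-window])
        recent = mean(scores[-window:])
        if earlier - recent >= drop_threshold:
            reason = f"average fell from {earlier:.2f} to {recent:.2f}"
            queue.append(ReviewItem(student_ref, reason))
    return queue


# Made-up score histories, keyed by pseudonymous reference.
history = {
    "ref_a": [0.80, 0.82, 0.79, 0.81, 0.60, 0.58, 0.55],  # clear drop
    "ref_b": [0.70, 0.72, 0.69, 0.71, 0.70, 0.73],        # stable
    "ref_c": [0.90, 0.40],                                # too little data
}
for item in flag_for_check_in(history):
    print(item.student_ref, "->", item.reason)
```

Only `ref_a` lands in the queue here. Students with too little history are skipped rather than guessed at, and the tool never messages a student or changes a grade by itself; that step stays with a person.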

Benefits of Ethically-Designed AI in Education

- Personalized Learning: Adaptive systems cater to diverse learning styles and needs.

- Reducing Achievement Gaps: Fair algorithms can help level the playing field for marginalized groups.

- Proactive Well-being Support: AI can flag early signs of disengagement or distress.

- Data-Driven Decision Making: Educators gain actionable insights to improve teaching strategies.

- Efficient Management: Automate repetitive tasks while keeping ethical checks in place.

Case Studies: AI Ethics in Practice

Case Study 1: Protecting Student Privacy in a Virtual School

A North American online school implemented an AI-driven attendance and performance monitoring system. To protect data privacy, the school:

- Implemented multi-factor authentication for data access.

- Published a transparent privacy policy and conducted parent workshops.

- Allowed students to review and request deletion of their data.

Case Study 2: Addressing Bias in Algorithmic Grading

A UK-based university piloted an AI grading tool for online exams. After launch, analysis revealed inconsistent grading patterns for international students. The institution intervened by:

- Retraining the model with more representative data.

- Offering manual grading as an option for flagged cases.

- Creating a student feedback channel to report perceived bias.
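One way to picture "manual grading for flagged cases" is a small routing rule. The sketch below is an illustration only and does not describe the university's actual system: it assumes the grading tool reports a confidence value with each score and that students can file an appeal, neither of which is stated in the case study. The `AIGrade` type, the `route` function, and the 0.85 cutoff are hypothetical.

```python
from typing import NamedTuple


class AIGrade(NamedTuple):
    submission_id: str
    score: float        # proposed score, 0-100
    confidence: float   # model's self-reported confidence, 0-1 (assumed to exist)
    appealed: bool = False  # set when the student reports perceived bias


def route(grade: AIGrade, min_confidence: float = 0.85) -> str:
    """Return 'manual' when a human should grade, otherwise 'auto'."""
    if grade.appealed or grade.confidence < min_confidence:
        return "manual"
    return "auto"


# Made-up submissions for illustration.
grades = [
    AIGrade("sub-001", 78.0, 0.95),
    AIGrade("sub-002", 64.0, 0.60),
    AIGrade("sub-003", 88.0, 0.97, appealed=True),
]
decisions = {g.submission_id: route(g) for g in grades}
print(decisions)
# {'sub-001': 'auto', 'sub-002': 'manual', 'sub-003': 'manual'}
```

An appeal always wins over the model's confidence, so a student who perceives bias gets a human grader even when the tool is sure of itself.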

Case Study 3: Enhancing Student Well-being

An Australian district used AI chatbots for homework help. Concerned about student anxiety due to 24/7 monitoring, the district:

- Limited chatbot availability to school hours.

- Provided well-being resources alongside academic tools.

- Trained educators to spot signs of stress and intervene.

Practical Tips for Ethical AI-Driven Learning Implementation

- Audit Regularly: Schedule periodic reviews of AI systems for privacy, fairness, and well-being risks.

- Engage Stakeholders: Include students, parents, and community leaders in decision making.

- Invest in Training: Equip staff with digital ethics literacy and AI system know-how.

- Build Transparent Policies: Publicly share how AI is used and how ethical concerns are handled.

- Pilot Before Scaling: Test new tools with small groups and iterate based on feedback.

Conclusion: Shaping the Future of Responsible AI in Education

The convergence of AI and education offers tremendous promise, but it comes with profound responsibilities. By foregrounding privacy protection, algorithmic fairness, and student well-being in every decision, educators, administrators, and tech providers can foster secure, equitable, and supportive learning environments.

As AI-driven learning platforms evolve, ongoing dialogue between technologists, teachers, students, and communities will be essential. Ethical principles must guide innovation, ensuring that no student is left behind and that everyone's rights and dignity are respected. By adopting the best practices and tips outlined here, schools can harness the full power of AI for education safely and responsibly.