Ethical Considerations in AI-Driven Learning: Safeguarding Student Rights and Fairness

Artificial intelligence (AI) is transforming education at an unprecedented pace, unlocking personalized learning experiences, automating administrative tasks, and empowering teachers to better understand their students’ needs. However, as AI-driven learning tools become more prevalent in classrooms and online platforms, educators, administrators, and developers must address major ethical considerations. Ensuring student rights, privacy, and fairness remains paramount to unlocking the full potential of educational technology. This article explores the ethical considerations in AI-driven learning, offers practical tips, and presents case studies to illustrate best practices for safeguarding student rights and fairness.

Understanding AI in Education

AI-driven learning refers to the use of artificial intelligence algorithms and tools in educational settings to facilitate personalized instruction, adaptive testing, and automated feedback. Examples include:

- Intelligent tutoring systems

- AI-powered grading platforms

- Learning analytics dashboards

- Language learning apps with adaptive features

The main benefits are:

- Personalization – Adapting content to individual learning styles and paces.

- Efficiency – Automating routine tasks to free educators for real teaching.

- Data-Driven Insights – Measuring student progress and identifying areas needing support.

While these advances enhance learning outcomes, they raise critical questions around data privacy, algorithmic bias, and transparency.

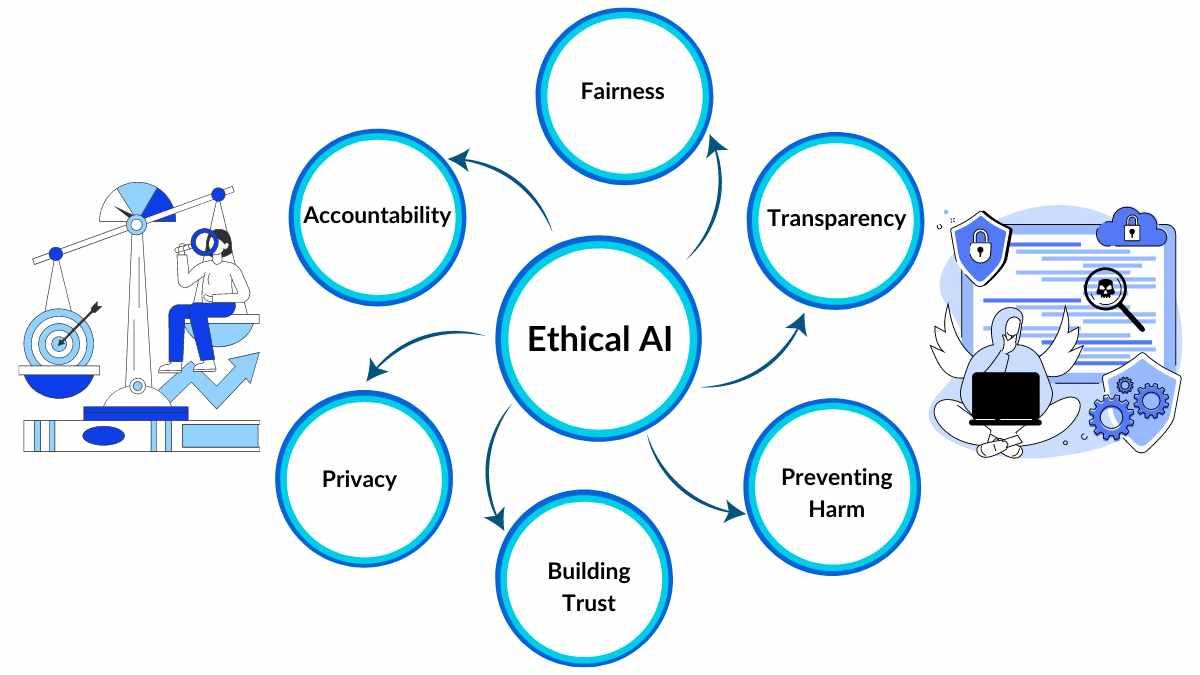

Key Ethical Considerations in AI-Driven Learning

1. Student Data Privacy and Confidentiality

AI models often rely on vast amounts of student data, from grades and behavioral trends to personal identifiers. To safeguard privacy:

- Limit Data Collection: Only collect data essential for the intended educational goal.

- Secure Storage: Store data using strong encryption and restrict access to authorized parties.

- Clear Policies: Disclose to students and parents what data are being collected and how they’ll be used.

Compliance with regulations like FERPA (Family Educational Rights and Privacy Act) or GDPR (General Data Protection Regulation) is critical when handling student information.
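The "Limit Data Collection" point above can be illustrated with a minimal Python sketch. The field names, the `ALLOWED_FIELDS` allow-list, and the helper functions are hypothetical, chosen only for illustration. The sketch assumes records arrive as dictionaries and that a secret key is stored separately from the data. Note that a keyed hash is pseudonymization, not anonymization, so the output should still be treated as personal data.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the learning feature actually needs.
ALLOWED_FIELDS = {"quiz_score", "time_on_task_minutes"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Replace a student ID with a keyed hash so records can still be
    linked to each other without exposing the real identifier."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["student_ref"] = pseudonymize(record["student_id"], secret_key)
    return reduced
```

Anything not on the allow-list (names, addresses, free-text notes) never reaches the analytics pipeline, which keeps the stored data tied to the stated educational goal.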

2. Fairness and Algorithmic Bias

AI-based educational platforms must ensure equitable treatment and avoid perpetuating biases. Risks include:

- Training Data Bias: AI systems trained on imbalanced datasets may favor certain demographics, causing unfair assessment or recommendations.

- Discriminatory Predictions: Algorithms may unintentionally recommend advanced courses or interventions less often to students from minority groups or students with disabilities.

- Lack of Diverse Input: AI designers’ own biases can influence the system’s outcomes.

Mitigation strategies include regular audits, involving diverse stakeholders, and making algorithms more transparent.
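One simple form a regular audit could take is comparing how often each group receives a positive recommendation. The sketch below is a hedged illustration rather than a complete fairness methodology: the function names, group labels, and sample data are made up, and the threshold that should trigger a review is a policy decision the code does not make.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive recommendations per group.

    `records` is an iterable of (group, recommended) pairs, where
    `recommended` is True when the system recommended the student.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was recommended, so no group is favored
    return min(rates.values()) / highest


# Made-up audit data for illustration only.
sample = [("A", True), ("A", True), ("A", False), ("A", True),
          ("B", True), ("B", False), ("B", False), ("B", False)]
rates = selection_rates(sample)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(disparity_ratio(rates))
```

A ratio well below 1.0, as in this sample, would be a signal to inspect the training data and the model more closely, not proof of discrimination on its own.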

3. Transparency and Explainability

Students and educators have a right to understand how AI-based decisions are made. Transparent systems promote trust and fairness:

- Explainable Models: Use AI techniques that allow stakeholders to understand the reasoning behind decisions.

- Clear Communication: Platforms should provide easy-to-understand documentation about their operation.

- Feedback Mechanisms: Allow users to contest or discuss automated decisions.
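As a rough illustration of an explainable model, the sketch below uses a linear score whose per-feature contributions can be shown directly to a student or teacher. The feature names and weights are hypothetical and not taken from any real platform. More complex models would need dedicated explanation techniques.

```python
# Hypothetical weights for a transparent, linear recommendation score.
WEIGHTS = {"quiz_average": 0.6, "assignment_completion": 0.3, "attendance": 0.1}


def explain_score(features: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return the total score and each feature's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return total, ranked


score, reasons = explain_score(
    {"quiz_average": 0.9, "assignment_completion": 0.5, "attendance": 1.0}
)
for name, value in reasons:
    print(f"{name}: {value:+.2f}")
print(f"total: {score:.2f}")
```

Because every contribution is a visible product of a weight and an input, a user who contests a decision can see which factor drove it and challenge that specific input.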

4. Consent and Autonomy

Students should have agency over the use of AI systems affecting their learning:

- Informed Consent: Clearly inform students (or guardians) when AI is used and explain its effects.

- Opt-Out Options: Allow learners to opt out of certain AI-driven processes if desired.
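In software terms, consent and opt-out often come down to checking a recorded preference before any AI-driven processing runs. The following minimal sketch assumes consent is stored per student and per feature. The `may_process` helper, the record layout, and the feature names are hypothetical.

```python
def may_process(consents: dict, student_ref: str, feature: str) -> bool:
    """Return True only if consent for this feature was explicitly recorded.

    Unknown students or features default to False, so processing is
    opt-in unless a consent record says otherwise.
    """
    return consents.get(student_ref, {}).get(feature, False) is True


# Hypothetical consent records, keyed by a student reference.
consents = {
    "stu-1": {"adaptive_quizzes": True, "automated_grading": False},
    "stu-2": {"adaptive_quizzes": True},
}

print(may_process(consents, "stu-1", "adaptive_quizzes"))   # True
print(may_process(consents, "stu-2", "automated_grading"))  # False
```

Defaulting to "no" when a record is missing is a design choice: it means a gap in the data cannot silently enroll a learner in an AI-driven process.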

5. Accountability and Oversight

Educational institutions and technology providers must establish clear lines of responsibility:

- Human Oversight: Educators should remain actively involved in interpreting AI suggestions.

- Regular Audits: Continuously review AI outcomes to identify and correct errors or biases.

- Ethical Frameworks: Adopt ethical guidelines and collaborate with experts to govern technology deployment.
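Human oversight can be built into the workflow itself, for example by routing uncertain or disputed AI suggestions to an educator before anything happens. The sketch below is one possible arrangement under stated assumptions: the `Suggestion` fields and the `REVIEW_THRESHOLD` value are hypothetical, and the model is assumed to report a confidence between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical threshold; an institution would set this through its own review process.
REVIEW_THRESHOLD = 0.85


@dataclass
class Suggestion:
    student_ref: str
    action: str
    confidence: float  # model's self-reported confidence in [0, 1]
    contested: bool = False  # True if the student or a parent disputed it


def needs_human_review(s: Suggestion) -> bool:
    """Flag low-confidence or contested suggestions for an educator."""
    return s.contested or s.confidence < REVIEW_THRESHOLD


def triage(suggestions):
    """Split suggestions into (for_review, routine) lists."""
    for_review = [s for s in suggestions if needs_human_review(s)]
    routine = [s for s in suggestions if not needs_human_review(s)]
    return for_review, routine
```

Even the "routine" list remains a set of suggestions; the split only decides which items an educator should look at first.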

Benefits of Ethical AI Implementation in Learning

Balancing innovation with ethics ensures long-term success and positive experiences for all learners. Key benefits include:

- Increased Trust: Students and parents are more likely to embrace AI tools when privacy and fairness are prioritized.

- Improved Outcomes: Ethical design helps to maximize learning while minimizing risks of harm or exclusion.

- Regulatory Compliance: Proactively addressing ethical issues can improve compliance and protect the institution’s reputation.

- Diversity and Inclusion: Fair algorithms empower marginalized groups and enable personalized support for every student.

Case Studies: Ethical Challenges and Solutions

Case Study 1: Addressing Bias in Automated Grading Systems

A large university adopted an AI-powered grading system to assist with standardized essay evaluations. However, the system’s accuracy for non-native English speakers was questioned. After reviewing training data, the team discovered linguistic biases. The university responded by:

- Re-training algorithms with a more diverse set of essays.

- Adding human review for flagged cases.

- Creating guidelines for continual improvement and transparency.

Case Study 2: Protecting Student Privacy in Online Learning Platforms

An EdTech startup collecting student engagement metrics for personalized feedback faced criticism over unclear data usage policies. In response, they:

- Revamped their privacy notice to specify what was collected and why.

- Added opt-in and opt-out features for data collection.

- Enhanced security protocols and routinely informed users about any changes.

Case Study 3: Incorporating Transparency in AI Recommendations

A school district began using an AI tool to recommend advanced coursework to students. Parents and teachers requested explanations for recommendations. The district partnered with the vendor to:

- Offer simple explanations for each recommendation.

- Enable teacher oversight before final course placement.

- Develop a feedback portal for students and parents.

Practical Tips for Educators and Developers

For educators and technology developers aiming to integrate AI in education responsibly, consider:

- Start Small: Pilot new AI tools with a small, diverse group and gather feedback.

- Engage Stakeholders: Include students, parents, and teachers in technology selection and evaluation processes.

- Prioritize Data Protection: Use best-in-class security protocols and be transparent about policies.

- Audit for Bias: Routinely check models for skewed outcomes and retrain as needed.

- Educate the Community: Offer workshops on AI literacy and ethical digital citizenship.

First-Hand Experience: Why Ethics Matters in AI-Learning Tools

“During our university’s digital transformation, we realized that students were concerned about how their data would be used,” shares Dr. Alicia Romero, an instructional designer. “Transparency and open conversation built trust and allowed us to co-design solutions that put student rights first. Our approach resulted in higher engagement and fewer complaints.”

Conclusion: Moving Forward with Responsible AI in Education

The rapid adoption of AI-driven learning calls for vigilance regarding ethical considerations like student privacy, fairness, transparency, consent, and accountability. By centering student rights and promoting fairness, educators and developers can ensure technological innovation sparks positive change without unintended harm.

- Transparency, inclusivity, and continual oversight are essential to safeguarding students.

- Appointing dedicated teams for ethical review ensures ongoing improvement.

- A commitment to ethical AI practices enhances trust and, ultimately, academic success.

By adopting a responsible and ethically informed strategy for AI-driven learning, educational institutions can balance innovation with protection, empowering every student to succeed in a fair and respectful environment.