Ethical Considerations of AI in Education: Key Challenges and Responsible Solutions

Artificial Intelligence (AI) has rapidly transformed the educational landscape, offering innovations in personalized learning, automated grading, and student support. However, this technological leap also raises ethical questions that educators, policymakers, and technologists must address. In this guide, we’ll examine the key challenges, responsible solutions, and best practices for using AI ethically in the classroom. Whether you’re an educator, administrator, or tech enthusiast, understanding the broader implications of AI integration, and the strategies for managing them responsibly, is crucial to building a fair and inclusive future for education.

Benefits of AI in Education

Before examining the ethical dimensions, it’s important to acknowledge the benefits of AI in education, which make its adoption so appealing:

- Personalized learning: Adapts content and pace to individual student needs, potentially helping to narrow achievement gaps.

- Efficient administration: Automates tasks like grading, scheduling, and student monitoring, letting educators focus on teaching.

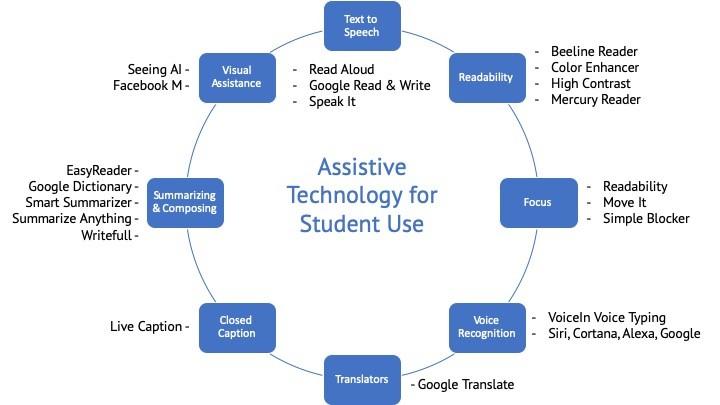

- Enhanced accessibility: Assists students with disabilities using speech-to-text, real-time translation, and visual aids.

- Data-driven insights: Helps identify struggling students early and tailor interventions.

Despite these advantages, without clear ethical guidelines, AI can amplify existing inequalities and undermine trust in education systems.

Key Ethical Challenges of AI in Education

Integrating AI into education is not without significant ethical hurdles. The most pressing ethical considerations for AI in education include:

1. Data Privacy and Security

AI systems require large datasets to function effectively. However, collecting and storing sensitive information about students creates data privacy risks and raises questions such as:

- How is student data collected, stored, and used?

- Who owns the data—the student, the institution, or the tech provider?

- Are there robust safeguards against data breaches or misuse?

Without proper controls, private student information could be exposed, leading to identity theft or misuse by third parties.

2. Algorithmic Bias and Fairness

AI algorithms can unintentionally reinforce social biases present in their training data, resulting in unfair outcomes. In an educational context, this can mean:

- Certain groups of students may receive lower grades due to biased training data.

- Recommendations and interventions may favor some students over others.

- Reinforcement of existing inequalities in learning opportunities.

Addressing algorithmic bias in education is vital to prevent discrimination and promote equality.

3. Transparency and Explainability

Many AI systems, especially those using deep learning, are “black boxes”—their decision-making processes are not easily understood. This lack of transparency raises concerns such as:

- How do AI systems arrive at grading decisions or recommendations?

- Can educators or students challenge or review these automated decisions?

Transparency in educational AI is crucial for accountability and building trust among stakeholders.

4. Student Autonomy and Human Oversight

There’s a risk that over-reliance on AI could undermine the role of teachers and diminish student autonomy. Key issues include:

- Students may feel disempowered by AI-derived feedback or tracking.

- Teachers may defer too much to algorithms, weakening their own critical pedagogical judgment.

Balancing AI-driven support with meaningful human oversight in education is essential.

5. Accessibility and the Digital Divide

Not all students and schools have equal access to advanced technology. Widespread AI adoption could unintentionally worsen the digital divide in education if not implemented inclusively.

Responsible Solutions for Ethical AI in Education

To effectively address these challenges, educational institutions, technology providers, and policymakers must adopt a set of responsible and actionable solutions:

1. Strengthening Data Privacy Regulations

- Comply with regulations such as the GDPR (General Data Protection Regulation) where they apply, and use their principles to guide the collection and processing of student data.

- Implement clear data usage policies and ensure informed consent from students and parents.

- Regularly audit AI systems for compliance and security vulnerabilities.
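One common technical safeguard behind these policies is pseudonymization: replacing student identifiers with keyed hashes before records leave the institution, so a vendor can link records for the same student without learning who that student is. The sketch below is a minimal illustration using Python’s standard `hmac` and `hashlib` modules; the secret key, the record fields, and the helper names `pseudonymize_id` and `prepare_for_vendor` are hypothetical. It is not a complete privacy program, and pseudonymized data can still count as personal data under the GDPR.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be loaded from a secure store
# and never shared with the vendor.
SCHOOL_SECRET_KEY = b"replace-with-a-long-random-secret"

# Fields assumed (for illustration) to directly identify a student.
DIRECT_IDENTIFIERS = {"name", "email", "student_id"}


def pseudonymize_id(student_id: str, key: bytes = SCHOOL_SECRET_KEY) -> str:
    """Return a stable keyed hash (HMAC-SHA256) of a student ID."""
    return hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_vendor(record: dict) -> dict:
    """Drop direct identifiers and add a pseudonym in place of the ID."""
    shared = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    shared["pseudonym"] = pseudonymize_id(record["student_id"])
    return shared


if __name__ == "__main__":
    record = {"student_id": "S-1042", "name": "Ada", "email": "ada@example.edu",
              "grade_level": 7, "quiz_score": 84}
    print(prepare_for_vendor(record))
```

Because the hash is keyed, a vendor cannot recompute pseudonyms from a list of known student IDs without the school’s secret, while the same student still maps to the same pseudonym across uploads.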

2. Auditing for Algorithmic Bias

- Use diverse and representative datasets to train AI models.

- Regularly test and review AI outputs for indications of bias or discrimination.

- Engage independent experts to conduct bias audits.
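Testing outputs for bias often starts by comparing how often each student group receives a given outcome. The sketch below computes per-group rates and the ratio of the lowest rate to the highest. The input format, the group labels, the helper names, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in employment-selection analysis) are illustrative assumptions. A single metric like this can flag a potential problem for closer review, but it cannot prove that a system is fair.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of positive outcomes per group.

    `outcomes` is an iterable of (group, got_positive_outcome) pairs, e.g.
    whether an AI system recommended a student for an advanced course.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, positive in outcomes:
        totals[group] += 1
        if positive:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the outcome, so there is no disparity
    return min(rates.values()) / highest


# Illustrative threshold; a real audit would choose metrics and thresholds
# together with domain experts and affected communities.
FLAG_BELOW = 0.8

if __name__ == "__main__":
    sample = [("A", True), ("A", True), ("A", False), ("A", True),
              ("B", True), ("B", False), ("B", False), ("B", False)]
    rates = selection_rates(sample)
    ratio = disparity_ratio(rates)
    print(rates, round(ratio, 2), "flag for review" if ratio < FLAG_BELOW else "ok")
```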

3. Promoting Transparency and Explainability

- Adopt “Explainable AI” models where possible, allowing stakeholders to understand, question, and challenge outputs.

- Prepare clear documentation about how algorithms function, their limitations, and intended uses.

- Provide educators and students with accessible explanations and recourse options.
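For simple models, an explanation can be as direct as listing how much each input contributed to a result. The sketch below assumes a hypothetical linear "needs extra support" score whose feature names, weights, and intercept are made up for illustration. Deep-learning systems require dedicated explanation techniques; the point here is only the kind of per-factor breakdown an educator or student could review and challenge.

```python
# Hypothetical weights for an interpretable (linear) score that flags students
# who may need extra support. Names and values are illustrative only.
WEIGHTS = {"missed_assignments": 0.6, "avg_quiz_score": -0.04, "absences": 0.3}
INTERCEPT = 1.0


def score_with_explanation(features):
    """Return the score and each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = INTERCEPT + sum(contributions.values())
    # Sort so the largest influences (in either direction) come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    student = {"missed_assignments": 3, "avg_quiz_score": 70, "absences": 2}
    score, ranked = score_with_explanation(student)
    print(f"score = {score:.2f}")
    for name, contribution in ranked:
        print(f"  {name}: {contribution:+.2f}")
```

Showing signed contributions lets a reader see not just which factors mattered but whether each one pushed the score up or down.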

4. Ensuring Human Oversight

- Maintain teachers’ authority to override AI recommendations where appropriate.

- Train educators to critically evaluate AI-driven insights and use them as one input among many.

- Empower students to understand and negotiate the role of AI in their learning journey.
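In software, teacher authority can be built in by treating the AI output as a suggestion that a teacher’s decision always supersedes. The sketch below is a hypothetical data model, not any particular product’s design: the class and field names are assumptions, and requiring a written reason for each override is one possible design choice that keeps overrides reviewable.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradeDecision:
    """An AI-suggested grade plus an optional teacher override."""
    student: str
    ai_suggested: float
    teacher_grade: Optional[float] = None
    override_reason: str = ""

    def final_grade(self) -> float:
        # The teacher's grade, when present, always takes precedence.
        if self.teacher_grade is not None:
            return self.teacher_grade
        return self.ai_suggested

    def override(self, grade: float, reason: str) -> None:
        # Require a reason so overrides can be reviewed and learned from later.
        if not reason.strip():
            raise ValueError("An override must include a reason.")
        self.teacher_grade = grade
        self.override_reason = reason


if __name__ == "__main__":
    decision = GradeDecision(student="pseudonym-17", ai_suggested=72.0)
    decision.override(85.0, "Essay argument was stronger than the model credited.")
    print(decision.final_grade(), decision.override_reason)
```

Recorded override reasons can also feed the regular bias and performance reviews described elsewhere in this guide.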

5. Bridging the Digital Divide

- Invest in equitable access to digital infrastructure, especially in underserved communities.

- Design AI educational tools that function offline or in low-resource settings.

- Collaborate with local stakeholders to ensure inclusivity and address specific needs.

Best Practices for Ethical AI Implementation in Education

- Stakeholder Involvement: Actively involve educators, students, policymakers, and parents in the design and deployment of educational AI.

- Continuous Training: Provide ongoing professional development on AI ethics for educators and administrators.

- Transparency Reporting: Publish regular reports on AI performance, impacts, and any incidents or concerns.

- Iterative Improvement: View AI as a tool requiring continual monitoring, evaluation, and refinement.

Case Study: AI-Powered Tutoring & Data Privacy

Consider a school implementing an AI-powered tutoring platform to provide personalized study plans. While initial results show improved student engagement, concerns about data privacy surface: parents want assurances that their children’s data isn’t being sold or misused. In response, the school:

- Requires vendors to adhere to strict data protection standards and to submit to regular audits.

- Provides clear information to families about what data is collected and why.

- Enables opt-out mechanisms for students and parents who prefer not to participate.

In this scenario, the school keeps the benefits of personalized tutoring while maintaining families’ trust through the ethical use of AI.

Practical Tips for Educators and Institutions

- Review and vet any AI-powered educational technology for ethical considerations before adoption.

- Establish a cross-functional ethics committee to oversee AI implementation and address emerging issues.

- Educate students on the basics of how AI works and its limitations, fostering digital literacy and critical thinking.

- Encourage open dialog about benefits, risks, and experiences with AI to ensure continual improvement.

Conclusion: Building an Ethical Foundation for AI in Education

As AI becomes an integral part of modern education, ethical vigilance is more important than ever. By addressing issues like data privacy, algorithmic bias, and transparency, and by adopting responsible solutions, educators and institutions can harness AI’s benefits without sacrificing trust or equity. Thoughtful, inclusive, and transparent practices will not only protect students but also lay the groundwork for an educational environment where technological innovation and ethical responsibility go hand in hand.

Staying informed and proactive is key to navigating the evolving landscape of AI ethics in education. If you’re involved in educational technology, now is the time to champion responsible solutions and create lasting positive change.